Heard of the “Gutenberg parenthesis”? This is the intriguing proposition that the era of mass consumption of text ushered in by the printing press more than five centuries ago was a mere interlude between the previous era of predominantly oral culture and a new digital-oral era on whose threshold we may now sit.

That’s a fascinating debate in itself. For the moment I just want to borrow the “parenthesis” concept — the idea that an innovative development we are accustomed to viewing as a step up some progressive ladder may instead be simply a temporary break in some dominant norm.

What if the “open Web” were just this sort of parenthesis? What if the advent of a (near) universal publishing platform open to (nearly) all were not itself a transformative break with the past, but instead a brief transitional interlude between more closed informational regimes?

That’s the question I weighed last weekend at Open Web Foo Camp. I’d never been to one of O’Reilly’s Foo Camp events, informal “unconferences” at the publisher’s Sebastopol offices, but this time I had the pleasure of hanging out with an extraordinary gang of smart people there. Here’s what I came away with.

For starters, of course, everyone has a different take on the meaning of “openness.” Tantek Çelik’s post lays out some of the principles embraced by ardent technologists in this field:

- open formats for freely publishing what you write, photograph, video and otherwise create, author, or code (e.g. HTML, CSS, JavaScript, JPEG, PNG, Ogg, WebM etc.).

- domain name registrars and web hosting services that, like phone companies, don’t judge your content.

- cheap internet access that doesn’t discriminate based on domains.

But for many users, these principles are distant, complex, and hard to fathom. They might think of the iPhone as a substantially “open” device because, hey, you can extend its functionality by buying new apps; that’s a lot more open than your Plain Old Cellphone, right? In the ’80s, Microsoft’s DOS-Windows platform was labeled “open” because, unlike Apple’s products, anyone could manufacture hardware for it.

“Open,” then, isn’t a category; it’s a spectrum. The spectrum runs from effectively locked-down platforms and services (think: broadcast TV) to those that are substantially unencumbered by technical or legal constraint. There is probably no such thing as a totally open system. But it’s fairly easy to figure out whether one system is more or less open than another.

The trend-line of today’s successful digital platforms is moving noticeably towards the closed end of this spectrum. We see this at work at many different levels of the layered stack of services that give us the networks we enjoy today — for instance:

- the App Store — iPhone apps, unlike Web sites and services, must pass through Apple’s approval process before being available to users.

- Facebook / Twitter — These phenomenally successful social networks, though permeable in several important ways, exist as centralized operations run by private companies, which set the rules for what developers and users can do on them.

- Comcast — the cable company that provides much of the U.S.’s Internet service just merged with NBC and faces all sorts of temptations to manipulate its delivery of the open Web to favor its own content and services.

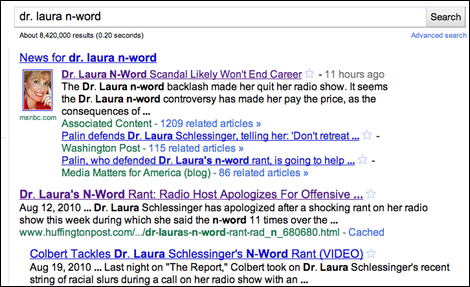

- Google — the big company most vocal about “open Web” principles has arguably compromised its commitment to net neutrality, and Open Web Foo attendees raised questions about new wrinkles in Google Search that may subtly favor large services like Yelp or Google-owned YouTube over independent sites.

The picture is hardly all-or-nothing, and openness regularly has its innings — for instance, with developments like Facebook’s new download-your-data feature. But once you load everything on the scales, it’s hard not to conclude that today we’re seeing the strongest challenge to the open Web ideal since the Web itself began taking off in 1994-5.

Then the Web seemed to represent a fundamental break from the media and technology regimes that preceded it — a mutant offspring of the academy and fringe culture that had inexplicably gone mass market and eclipsed the closed online services of its day. Now we must ask, was this openness an anomaly — a parenthesis?

My heart tells me “no,” but my brain says the answer will be yes — unless we get busy. Openness is resilient and powerful in itself, but it can’t survive without friends, without people who understand it explaining it to the public and lobbying for it inside companies and in front of regulators and governments.

For me, one of the heartening aspects of the Foo weekend was seeing a whole generation of young developers and entrepreneurs who grew up with a relatively open Web as a fact of life begin to grapple with this question themselves. And one of the questions hanging over the event, which Anil Dash framed, was how these people can hang on to their ideals once they move inside the biggest companies, as many of them have.

What’s at stake here is not just a lofty abstraction. It’s whether the next generation of innovators on the Web — in technology, in services, or in news and publishing, where my passion lies — will be free to raise their next mutant offspring. As Steven Johnson reminds us in his new book, when you close anything — your company, your service, your mind — you pay an “innovation tax.” You make it harder for ideas to bump together productively and become fertile.

Each of the institutions taking a hop toward the closed end of the openness spectrum today has inherited advantages from the relatively open online environment of the past 15 years. Let’s hope their successors over the next 15 can have the same head start.