A week ago Wednesday I traveled to Albany, N.Y., at the kind invitation of some professors at the College of St. Rose. (Thanks, Cailin Brown and Dan Nester!)

Turns out that Say Everything is being used as a text in a half-dozen classes at that school. As part of the college’s participation in the National Council of Teachers of English’s National Day on Writing, and also as part of a cool writers’ series called Frequency North, St. Rose asked me to come talk about blogging and writing. Which I always love to do.

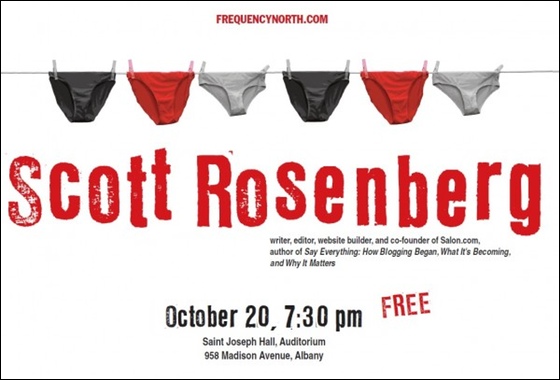

The poster for the talk, reproduced above, caused me to do a double take, which I’m sure was the point. I’m slow, sometimes, so it took me a minute before I registered the “hanging your laundry in public” concept. Nice work, and probably the one and only time my name will share a billboard with panties.

Anyway, I had a great day at St. Rose talking with students and faculty and chatting on the local public radio affiliate.

The college has posted a complete video of the talk. (Or here’s just a three-minute taste of the audio, with some optimistic observations on the concept of information overload.) Also, I worked from pretty extensive notes, and I’ve cleaned them up, filled them out a bit and posted them on a separate page. Here it is — Large Blocks of Uninterrupted Text: A Talk on Blogging and ‘Say Everything.’

This is a pretty extensive update on the blogging talk that I was giving back when Say Everything first came out. I start with the Onion, proceed to the death of culture, and discuss the rise of blogging just a bit. Then I use the remarkable saga of Joey DeVilla the Accordion Guy and his New Girl — a story that didn’t make it into Say Everything — as a way to discuss a whole series of critiques of blogging and online discourse along some familiar vectors: truth and trust; anonymity and civility; serendipity; narcissism; shallowness and substance; attention and overload.